
2017 NHTSA Report that Tesla Autopilot Cut Crashes 40% Called "Bogus"

Drew Dowdell posted an article in Tesla

Back in 2017, the NHTSA released a report on the safety of Tesla's Autopilot system following the fatal crash of a Tesla owner in 2016. That report claimed that the use of Autopilot, or more precisely its lane-keeping function, Autosteer, reduced crash rates by 40%. In that original crash, the owner repeatedly ignored warnings to resume manual control of the vehicle, and critics questioned whether Autopilot was encouraging drivers to pay less attention to the road. The NHTSA report appeared to put those concerns to rest. Later, when a second driver died in an Autopilot-related accident, Tesla CEO Elon Musk pointed to the NHTSA study and its claimed 40% improvement in safety.

Now, two years after the original report, a third party has analyzed the data and found the 40% claim to be bogus, according to a report by Ars Technica. The NHTSA data on Autopilot crashes was not publicly available when Quality Control Systems (QCS), a research and consulting firm, requested it under the Freedom of Information Act (FOIA). The NHTSA claimed the data from Tesla was confidential and that releasing it would harm the company. QCS sued the NHTSA, and in September of last year a federal judge granted the FOIA request.

What QCS found was that missing data and poor math made the NHTSA report grossly inaccurate. The period in question covered vehicles both before and after Autopilot was installed; however, a significant number of the vehicles in the data set provided by Tesla have large gaps between the last recorded mileage before Autopilot was installed and the first recorded mileage after installation. The result is a gray area in which it is unknown whether Autopilot was active or not. In spite of this deficiency, the NHTSA used the data anyway. Only 5,714 vehicles in the data set have no gap between their pre- and post-Autopilot mileage readings. When QCS reran the calculations using only those vehicles, it found that crashes per mile actually increased 59% after Autopilot was installed.
Does that mean Autopilot makes a crash 59% more likely? No, for a number of reasons. First, the sample QCS had to work with represents a very small percentage of Tesla's total sales. Second, the data only covers vehicles with version 1 of Tesla's Autopilot, a version Tesla hasn't sold since 2016. Tesla stopped quoting the NHTSA report around May of 2018, possibly realizing something was fishy with the data. It has since turned to its own reports, which state that cars with Autopilot engaged have fewer accidents per mile than cars without it engaged. Those figures have some statistical fishiness as well: Autopilot is only meant to be engaged on the highway, and because of the higher speeds there, all vehicles have a lower rate of accidents per mile on the highway. Comparing Autopilot miles against all other miles therefore tends to flatter Autopilot, whatever its real effect. We may just have to wait until more data is available to find out whether Tesla Autopilot and similar systems make crashes that much less likely.

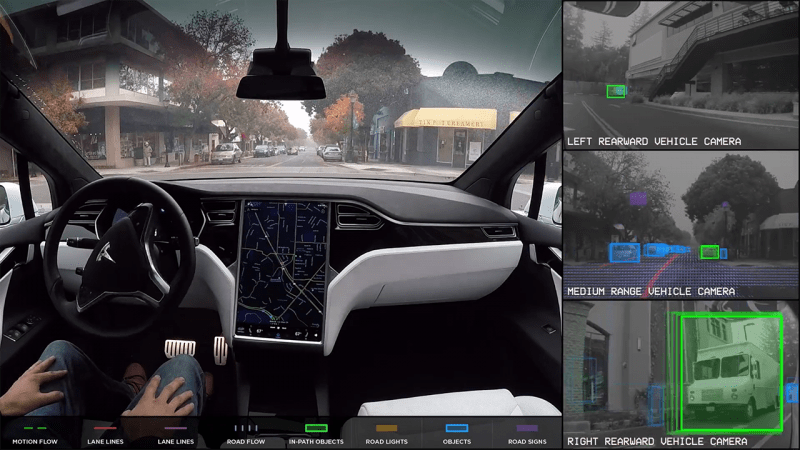


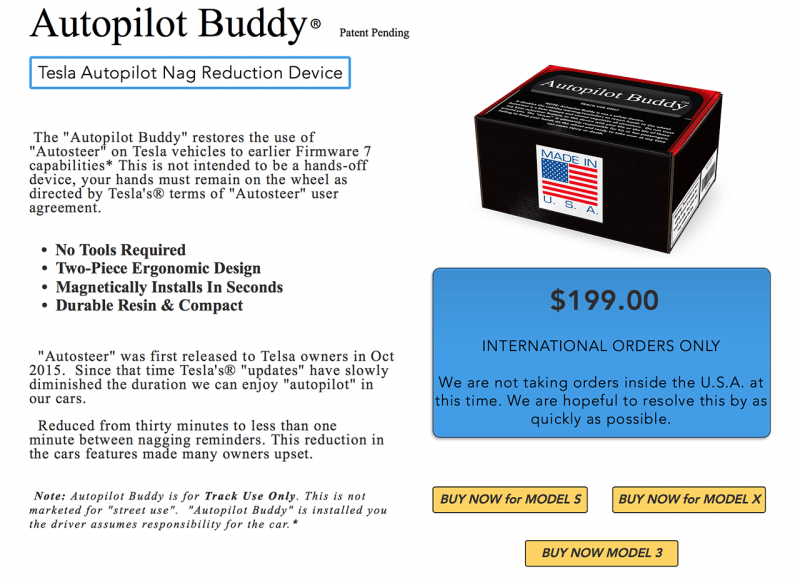


NHTSA Issues A Cease and Desist To the Autopilot Buddy

William Maley posted an article in Automotive Industry

The National Highway Traffic Safety Administration has sent a cease-and-desist letter to Dolder, Falco and Reese Partners LLC, the company behind an aftermarket device called the Autopilot Buddy. Autopilot Buddy is a small, weighted device that clips onto either side of the steering wheel to apply a slight amount of torque. This fools the Autopilot system into thinking that a driver has their hands on the wheel. The company markets it as a "nag reduction device" that cuts down on the warnings telling the driver to keep their hands on the wheel. The company has a disclaimer on Autopilot Buddy that states: […] That would be OK if a video demonstrating the product didn't appear to have been filmed on a public road of some sort. “A product intended to circumvent motor vehicle safety and driver attentiveness is unacceptable. By preventing the safety system from warning the driver to return their hands to the wheel, this product disables an important safeguard, and could put customers and other road users at risk,” said NHTSA Deputy Administrator Heidi King in a statement. NHTSA has given Dolder, Falco and Reese Partners LLC until June 29 to respond and certify to NHTSA "that all U.S. marketing, sales, and distribution of the Autopilot Buddy has ended." Source: Roadshow, NHTSA


Rumorpile: Tesla's Executives Canned Autopilot Safeguards Due to Costs

William Maley posted an article in Tesla

A new report from the Wall Street Journal says that engineers at Tesla wanted to add more safeguards to Autopilot to keep drivers attentive to the road, but their proposals were rejected by executives. This has been a concern within Tesla since Autopilot's launch, and it only intensified in 2016 when a Model S crashed into a trailer in Florida. The driver was killed, and an investigation revealed that Autopilot was on at the time. After the crash, Tesla brought in suppliers to discuss possible ways to keep a driver's attention on the road. Two ideas mentioned in the report were:

- Tracking the driver's eyes to make sure they are watching the road. This would use a camera and infrared sensors. Cadillac uses something similar with its Super Cruise system.
- Incorporating sensors into the steering wheel to monitor whether the driver's hands are on the wheel. Autopilot already has a sensor that monitors small movements of the wheel and issues a warning to the driver if it doesn't detect any movement. The downside is that the driver can quickly touch the wheel to stop the warnings for a few moments.

However, Tesla executives (including CEO Elon Musk) threw out the ideas due to cost and concerns that the technologies would be ineffective or would annoy drivers. “It came down to cost, and Elon was confident we wouldn’t need it,” a source told the Wall Street Journal. A key issue with Autopilot is the false sense of confidence drivers place in it; the number of near-miss videos on YouTube is evidence of that. Tesla's owner's manuals state that Autopilot has limitations, and when Autopilot is engaged, drivers must acknowledge an on-screen message "that it is their responsibility to stay alert and maintain control." Musk admitted during Tesla's recent earnings call that complacency with Autopilot was an issue. “When there is a serious accident, it is almost always, in fact, maybe always the case, that it is an experienced user,” said Musk.
“And the issue is...more one of complacency, like we get too used to it.” Source: Wall Street Journal (Subscription Required)


There is no love lost between Tesla Motors and the former director of Autopilot. Bloomberg reports that the Silicon Valley automaker has sued Sterling Anderson, alleging that he took confidential information about Autopilot and tried to recruit Tesla employees to his new venture. In the court filing, Tesla says Anderson began work on an autonomous-car venture, Aurora Innovation LLC, last summer while he was still head of the Autopilot project. As director of Autopilot, Anderson had access to Tesla's semi-autonomous technology. He left Tesla in December. Anderson has been collaborating with Chris Urmson, the former head of Google’s self-driving car project. Tesla is seeking a court order barring Anderson from "any use of Tesla’s proprietary information related to autonomous driving." Tesla is also seeking an order banning Anderson and Aurora Innovation from recruiting Tesla employees and contractors for a year after Anderson’s termination date. “Tesla’s meritless lawsuit reveals both a startling paranoia and an unhealthy fear of competition. This abuse of the legal system is a malicious attempt to stifle a competitor and destroy personal reputations. Aurora looks forward to disproving these false allegations in court and to building a successful self-driving business,” Aurora Innovation LLC said in a statement yesterday. Source: Bloomberg


NHTSA Closes Investigation On Tesla Autopilot, Not Seeking Recall

William Maley posted an article in Tesla

Last May, Joshua Brown was killed when his Tesla Model S, operating on Autopilot, collided with a tractor-trailer. After an investigation that took more than half a year, the National Highway Traffic Safety Administration released its findings today. In its report, NHTSA said it found no evidence of defects in the Autopilot system. The agency also stated that it would not ask Tesla to recall models equipped with Autopilot. In a statement, Tesla said "the safety of our customers comes first, and we appreciate the thoroughness of NHTSA’s report and its conclusion." NHTSA's report revealed that neither Autopilot nor Brown applied the brakes to prevent or lessen the impact of the crash. However, NHTSA cleared the Automatic Emergency Braking system, noting that it is “designed to avoid or mitigate rear end collisions” and that “braking for crossing path collisions, such as that present in the Florida fatal crash, are outside the expected performance capabilities of the system.” As for Brown, NHTSA's report said he took no steering or other action to avoid the crash. The last recorded action in the vehicle was the cruise control being set to 74 mph. NHTSA notes that in its reconstruction of the crash, Brown had about seven seconds from when the tractor-trailer would have been visible to the moment of impact, which may have given him a chance to take some sort of action. This raises a serious concern about how much confidence owners place in the Autopilot system. Tesla states that Autopilot “is an assist feature that requires you to keep your hands on the steering wheel at all times” and that “you need to maintain control and responsibility for your vehicle” while using it. Despite this, various videos showing Model S cars narrowly avoiding crashes have led people to think that Autopilot is fully autonomous, which it isn't.
“Although perhaps not as specific as it could be, Tesla has provided information about system limitations in the owner’s manuals, user interface and associated warnings/alerts, as well as a driver monitoring system that is intended to aid the driver in remaining engaged in the driving task at all times. Drivers should read all instructions and warnings provided in owner’s manuals for ADAS (advanced driver assistance systems) technologies and be aware of system limitations,” said NHTSA. Tesla, to its credit, has been updating Autopilot to make drivers pay attention when using it. These updates include more warnings for the driver to intervene when needed and turning off the system if a driver doesn't respond to repeated requests. Source: National Highway Traffic Safety Administration (Report in PDF), Tesla


The National Transportation Safety Board (NTSB) has issued its preliminary report on the fatal May crash involving a Tesla Model S in Autopilot mode and a semi-truck. According to data downloaded from the Model S, the vehicle was traveling above the speed limit (74 mph in a 65 mph zone) with Autopilot engaged. The speed helps explain how the Model S traveled about 347 feet after striking the trailer: 297 feet before hitting a utility pole, and another 50 feet after breaking it. The report doesn't include any analysis of the accident or a probable cause. NTSB says its investigators are still downloading data from the vehicle and examining information from the crash scene. A final report is expected within the next 12 months. Car and Driver reached out for comment to both Tesla and Mobileye, the company that supplies the chips that process the images captured by Autopilot's cameras. Tesla didn't respond, but its blog post announcing the crash said: "neither Autopilot nor the driver noticed the white side of the tractor trailer against a brightly lit sky, so the brake was not applied." A Mobileye spokesman told the magazine that its chips aren't designed to flag a scenario like the one that played out in the crash. “The design of our system that we provide to Tesla was not . . . it’s not in the spec to make a decision to tell the vehicle to do anything based on that left turn, that lateral turn across the path. Certainly, that’s a situation where we would hope to be able to get to the point where the vehicle can handle that, but it’s not there yet,” said Dan Galves, a Mobileye spokesperson. It should be noted that hours before NTSB released its report, Mobileye announced that it would end its relationship with Tesla once the current contract runs out.
The Wall Street Journal reports that disagreements between the two companies over how the technology was being deployed, along with the fatal crash, led to the split. “I think in a partnership, we need to be there on all aspects of how the technology is being used, and not simply providing technology and not being in control of how it is being used,” said Mobileye Chief Technical Officer Amnon Shashua during a call with analysts. “It’s very important given this accident…that companies would be very transparent about the limitations” of autonomous driving systems, said Shashua. “It’s not enough to tell the driver to be alert but to tell the driver why.” Recently, Mobileye announced a partnership with BMW and Intel aimed at getting autonomous vehicles on the road by 2021. Source: NTSB, 2, Car and Driver, Wall Street Journal (Subscription Required)


NHTSA Opens Investigation Into Fatal Crash With Tesla's Autopilot

William Maley posted an article in Tesla

The National Highway Traffic Safety Administration (NHTSA) has opened an investigation into a fatal crash involving Tesla's Autopilot system. In a statement given to Reuters, NHTSA said the driver of a 2015 Tesla Model S was killed while the vehicle was in Autopilot mode. The crash took place on May 7 in Williston, Florida, when a tractor-trailer was making a left turn across a divided highway. In a lengthy blog post, Tesla said: "neither Autopilot nor the driver noticed the white side of the tractor trailer against a brightly lit sky, so the brake was not applied." The Model S drove underneath the trailer, with the bottom of the trailer striking the windshield. The Verge reports the driver was 40-year-old Joshua Brown, who filmed various videos of his Model S. One of the videos on his YouTube channel showed his Model S avoiding an accident with a bucket truck. NHTSA's investigation will look at the design and performance of the Model S and its various components, including Autopilot. It should be noted that it is standard practice for NHTSA to investigate any crash where a vehicle's system could be at fault. Tesla's blog post says this is the first known fatality in over 130 million miles driven with Autopilot activated. Autopilot has been a source of controversy since Tesla rolled it out last year. Numerous videos of Model S owners filming themselves in dangerous situations, some showing the system not working, led Tesla to make some drastic changes. These included limiting the types of roads on which the system could be turned on and adding checks for whether someone was sitting in the driver's seat. Tesla has said time and time again that Autopilot is a beta feature and that the driver needs to pay attention. "Autopilot is getting better all the time, but it is not perfect and still requires the driver to remain alert," Tesla said in its post.
"It is important to note that Tesla disables Autopilot by default and requires explicit acknowledgement that the system is new technology and still in a public beta phase before it can be enabled. When drivers activate Autopilot, the acknowledgment box explains, among other things, that Autopilot ‘is an assist feature that requires you to keep your hands on the steering wheel at all times,’ and that ‘you need to maintain control and responsibility for your vehicle’ while using it." Nevertheless, this crash puts autonomous technologies and Tesla under intense scrutiny. Source: Automotive News (Subscription Required), The Detroit News, Reuters, Tesla, The Verge
